Assisting the Comprehension of Intention versus Reality

Software does exactly what the developer told it to do, including when it fails. When it fails, the reason is that it was told to do the wrong thing; in other words, it contains bugs introduced by its developers. Professor Jim Jones and his students conduct research to help address such bugs and thus ultimately improve the quality of software. As Jones puts it, “One way to define a bug is as a gap between human intention and implementation reality, neither of which are easy to identify or define. Understanding what the program is doing during execution and deployment can involve extensive research. Similarly, defining exactly what should be happening, what you want to happen, is often a process of discovery and iterative modification.” Making both intention and reality more visible and discoverable is a common theme of the research that Jones and his students pursue.

To this end, Jones is pursuing a multifaceted research agenda. One area he is investigating is field testing of mobile applications. Together with his Ph.D. student Yang Feng, he studied the testing practices of crowdsourced field testers for mobile phone applications. They found that such beta-software testers often submitted bug reports that were less descriptive in their text, and more richly descriptive in their screenshots, than reports for more traditional desktop software. Such a finding may not be surprising, given the relative ease of taking screenshots on a mobile device and the relative difficulty of typing long-form, descriptive text. However, this characteristic creates difficulties for several automated testing and triaging software-engineering tools. For example, automatic bug-report duplicate-detection tools traditionally rely on the similarity of the words used to describe a failure. These tools can suggest to developers that a cluster of bug reports may describe a similar bug, and developers can use this information to identify especially problematic field failures, attribute a severity level to the class of bug reports, and assign developers to investigate and fix the bugs.
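To make the word-similarity idea concrete, here is a minimal sketch of text-based duplicate detection using bag-of-words cosine similarity. It is an illustration only, not the method of any particular tool; the function names, the sample reports, and the 0.4 threshold are all assumptions chosen for the example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a report and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters (0.0 to 1.0)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def likely_duplicates(reports, threshold=0.4):
    """Return index pairs of reports whose word similarity meets the threshold.

    The threshold is a hypothetical value picked for this example.
    """
    bags = [Counter(tokenize(r)) for r in reports]
    pairs = []
    for i in range(len(bags)):
        for j in range(i + 1, len(bags)):
            if cosine_similarity(bags[i], bags[j]) >= threshold:
                pairs.append((i, j))
    return pairs


# Hypothetical reports: two descriptive ones and one terse, mobile-style one.
reports = [
    "App crashes when I tap the login button on the home screen",
    "Crashes after tapping login button, home screen freezes",
    "crash",
]
print(likely_duplicates(reports))
```

In this sample, the two descriptive reports are paired, while the one-word report shares no tokens with either and is left out. That is the weakness of purely textual matching when descriptions are sparse.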

However, for mobile applications, where the text descriptions are often too sparse, Jones and his team found that they can leverage the relatively rich set of screenshot images to benefit developer understanding and automated triaging. Using techniques borrowed from the computer-vision domain, screenshots from mobile applications can be analyzed and used to benefit testing efforts. Screenshots of mobile applications are often better defined (e.g., more consistent aspect ratios, consistent theming, no overlapping windows) than those of traditional desktop software, and as such, analysis of these screenshots becomes more tractable.

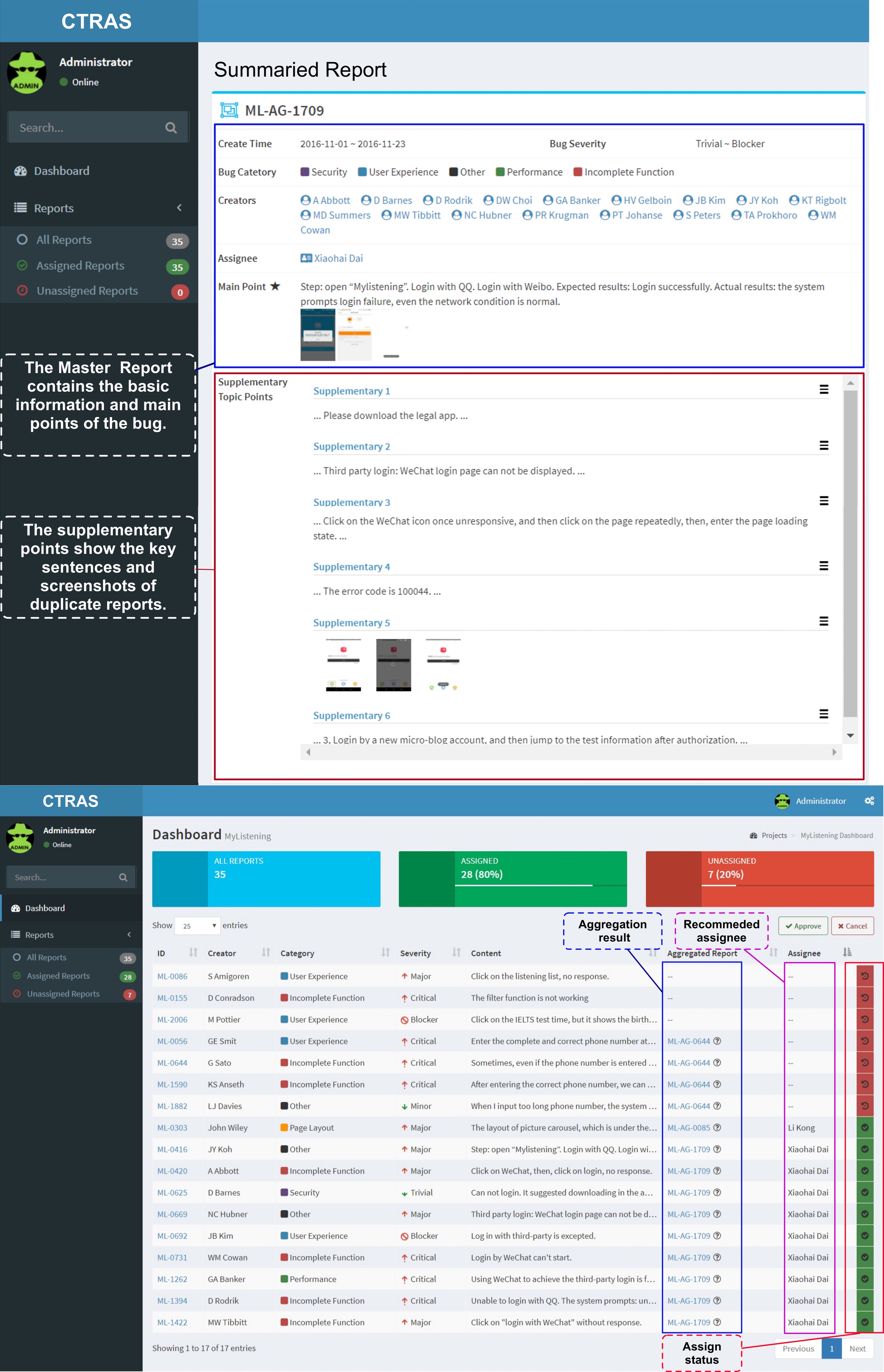

Jones and his team therefore developed a number of tools that target the mobile application testing domain, specifically crowdsourced or field testing, to make the reality of software behavior in the field more easily understandable and manageable for developers and testers back at their development organization. One such effort is a tool called CTRAS (Crowdsourced Test Reports Aggregation and Summarization), a novel approach that identifies and leverages duplicate bug reports to enrich the content of bug descriptions and improve the efficiency of inspecting these reports. CTRAS automatically aggregates duplicates based on both textual information and screenshots, and then summarizes the duplicate test reports into a comprehensive and comprehensible report. It can automatically generate high-level summaries that highlight the most prevalent aspects of a group of similar bug reports. With these groupings, the developer can take action on the group of bug reports as a whole, e.g., attributing severity levels and assigning developers to investigate and fix the bugs. Jones’s team hopes that such tools may encourage different behavior from crowdsourced field testers: instead of being discouraged from submitting duplicate bug reports (as is common in practice for traditional desktop software), testers will be encouraged to submit their failures in the hope that such redundancies can provide a more holistic and descriptive view of the bugs.
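The idea of aggregating duplicates from both text and screenshots can be sketched as follows. This is not CTRAS’s actual algorithm, which the article does not detail. It is a simplified illustration that assumes each screenshot has already been reduced to a normalized feature histogram (the `hist` field), blends a Jaccard text distance with a histogram distance using a hypothetical weight, and groups reports by single-link clustering. All names, weights, and thresholds are assumptions.

```python
import re


def text_distance(a, b):
    """Jaccard distance between the word sets of two descriptions."""
    wa = set(re.findall(r"[a-z0-9]+", a.lower()))
    wb = set(re.findall(r"[a-z0-9]+", b.lower()))
    if not wa and not wb:
        return 0.0
    return 1.0 - len(wa & wb) / len(wa | wb)


def image_distance(h1, h2):
    """Half the L1 distance between two normalized histograms (0.0 to 1.0)."""
    return 0.5 * sum(abs(x - y) for x, y in zip(h1, h2))


def combined_distance(r1, r2, text_weight=0.5):
    """Weighted blend of text and screenshot distance (weight is assumed)."""
    return (text_weight * text_distance(r1["text"], r2["text"])
            + (1.0 - text_weight) * image_distance(r1["hist"], r2["hist"]))


def group_reports(reports, threshold=0.6, text_weight=0.5):
    """Single-link grouping: reports closer than the threshold share a group."""
    parent = list(range(len(reports)))

    def find(i):
        # Follow parent links to the group representative, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(reports)):
        for j in range(i + 1, len(reports)):
            if combined_distance(reports[i], reports[j], text_weight) < threshold:
                parent[find(j)] = find(i)

    groups = {}
    for i in range(len(reports)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Hypothetical reports; "hist" stands in for features extracted from a screenshot.
reports = [
    {"text": "App crashes when I tap login", "hist": [0.7, 0.2, 0.1]},
    {"text": "crash", "hist": [0.6, 0.3, 0.1]},
    {"text": "Settings page shows wrong font size", "hist": [0.1, 0.1, 0.8]},
]
print(group_reports(reports))                   # text and screenshots together
print(group_reports(reports, text_weight=1.0))  # text only
```

With these sample values, the terse “crash” report joins the first report because their screenshots are similar, whereas the text-only setting leaves all three reports in separate groups.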

Another thrust of work to help bridge the gap between developer intention and software reality is a research project that aims to provide high-level abstractions and visualizations of software execution. Understanding what happens during execution can be a daunting process: millions of instructions can be executed in the blink of an eye, and logging all instruction executions can produce trace files in the gigabytes. To help developers understand what is happening during execution, Jones and his Ph.D. students Kaj Dreef and Yang Feng are working on providing interactive and understandable representations of software execution. These research efforts use a number of technologies (including machine-learning clustering, data mining, frequent-pattern mining, information-retrieval labeling, and information visualization) to take extremely large execution trace files and produce an explorable interface that allows developers to see multiple levels of abstraction of software behavior. Their tool, called Sage, provides a visualization that reveals a timeline of execution in terms of behavioral phases; each phase can be “unfolded” to reveal sub-phases. Jones and his team hope that such tools can help developers understand the dynamic behavior of their software, and thus diagnose problems, fix bugs, and identify performance bottlenecks.
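The notion of a timeline of phases that unfold into sub-phases can be illustrated with a toy example. The sketch below does not use the clustering or mining techniques mentioned above, and it is not how Sage works. It simply assumes a trace of hypothetical `module.function` event names, treats consecutive events from the same module as a phase, and treats consecutive repeats of the same function as a sub-phase.

```python
from itertools import groupby


def summarize(trace):
    """Fold a flat event trace into phases, each holding run-length sub-phases."""
    phases = []
    # A phase is a maximal run of consecutive events from the same module.
    for module, events in groupby(trace, key=lambda e: e.split(".")[0]):
        events = list(events)
        # A sub-phase is a maximal run of the same event inside that phase.
        sub_phases = [(name, len(list(run))) for name, run in groupby(events)]
        phases.append({"phase": module,
                       "events": len(events),
                       "sub_phases": sub_phases})
    return phases


# Hypothetical trace of "module.function" events, in execution order.
trace = ["io.open", "io.read", "io.read", "io.read",
         "parse.token", "parse.token", "parse.tree",
         "io.write"]

for phase in summarize(trace):
    print(phase["phase"], phase["events"], phase["sub_phases"])
```

Even in this simplified form, eight raw events collapse into three phases on the timeline, and each phase keeps enough detail to be expanded on demand.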

Another area of interest is the popular and challenging area of testing for distributed systems. Jones and his Ph.D. student Armin Balalaie are creating a system called SpiderSilk that provides a testing framework for distributed systems. Even after years of development, distributed systems can still malfunction, lose data, and become inconsistent in certain scenarios and during environmental failures. These failures are often caused by race conditions and environmental uncertainties, e.g., partial network failures and improper time synchronization. Due to the lack of suitable testing frameworks, current distributed systems cannot be tested against these failures in an automated way. To address this problem, SpiderSilk is a novel testing framework, specifically tailored for distributed systems, that can inject environmental failures, impose an order on different nodes’ operations to test concurrency and race conditions, and make assertions based on the internal states of different processes.
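The three capabilities named above (injecting failures, ordering operations across nodes, and asserting on internal state) can be illustrated with a toy, in-process simulation. This is not SpiderSilk’s API, which the article does not describe. The `Network` and `Node` classes and the scenario are hypothetical, and ordering is imposed trivially by running the steps sequentially in a single thread.

```python
class Network:
    """Toy in-memory network whose links can be cut to simulate a partition."""

    def __init__(self):
        self.cut_links = set()

    def partition(self, a, b):
        self.cut_links.add(frozenset((a, b)))

    def heal(self, a, b):
        self.cut_links.discard(frozenset((a, b)))

    def deliver(self, sender, receiver, key, value):
        """Apply a replicated write on the receiver unless the link is cut."""
        if frozenset((sender.name, receiver.name)) in self.cut_links:
            return False
        receiver.store[key] = value
        return True


class Node:
    """Toy replica whose internal state is a key-value store."""

    def __init__(self, name, network):
        self.name = name
        self.network = network
        self.store = {}
        self.peers = []

    def write(self, key, value):
        """Apply a write locally, then try to replicate it to every peer."""
        self.store[key] = value
        return all([self.network.deliver(self, p, key, value) for p in self.peers])


def run_partition_scenario():
    net = Network()
    a, b = Node("a", net), Node("b", net)
    a.peers, b.peers = [b], [a]

    net.partition("a", "b")         # inject an environmental failure
    replicated = a.write("x", 1)    # step 1: write on node a during the partition
    assert replicated is False
    assert a.store == {"x": 1} and b.store == {}  # internal states have diverged

    net.heal("a", "b")              # remove the failure
    replicated = b.write("x", 2)    # step 2: ordered strictly after step 1
    assert replicated is True
    assert a.store == b.store == {"x": 2}         # replicas agree again
    return a.store, b.store


print(run_partition_scenario())
```

A real framework would have to coordinate separate processes and real networks rather than objects in one interpreter, but the shape of the test (inject, order, assert) is the same.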

Jones believes that there is a never-ending need for greater comprehension of software: both the developers’ intention for what it should do and the reality of what it is actually doing. As software becomes more prevalent in our everyday lives and is responsible for more of our functioning as a civilization, and as it grows larger and more complex, such comprehension becomes ever more important.

For more information on Jones’s research, see his homepage: http://www.ics.uci.edu/~jajones and his research group’s website: http://spideruci.org.